Implement PYI006 "bad version info comparison"

## What it does

Ensures that you only use `<` and `>=` for version info comparisons with

`sys.version_info` in `.pyi` files. All other comparisons such as

`>`, `<=` and `==` are banned.

## Why is this bad?

```python

>>> import sys

>>> print(sys.version_info)

sys.version_info(major=3, minor=8, micro=10, releaselevel='final', serial=0)

>>> print(sys.version_info > (3, 8))

True

>>> print(sys.version_info == (3, 8))

False

>>> print(sys.version_info <= (3, 8))

False

>>> print(sys.version_info in (3, 8))

False

```
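
To make the rule concrete, here is a minimal sketch of the check written against Python's `ast` module. It is not Ruff's Rust implementation; the helper names `is_version_info` and `bad_version_comparisons` are hypothetical, and the sketch only handles comparisons with `sys.version_info` on the left-hand side.

```python
import ast

# Operators the rule description permits for `sys.version_info`.
ALLOWED_OPS = (ast.Lt, ast.GtE)


def is_version_info(node: ast.expr) -> bool:
    """Return True for the exact expression `sys.version_info`."""
    return (
        isinstance(node, ast.Attribute)
        and node.attr == "version_info"
        and isinstance(node.value, ast.Name)
        and node.value.id == "sys"
    )


def bad_version_comparisons(source: str) -> list[int]:
    """Return the line numbers of disallowed `sys.version_info` comparisons."""
    lines = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Compare) and is_version_info(node.left):
            if not all(isinstance(op, ALLOWED_OPS) for op in node.ops):
                lines.append(node.lineno)
    return sorted(lines)


stub = """\
import sys
if sys.version_info >= (3, 8): ...
if sys.version_info < (3, 8): ...
if sys.version_info == (3, 8): ...
if sys.version_info > (3, 8): ...
"""
print(bad_version_comparisons(stub))  # [4, 5]
```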

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>

This prevents the UP034 autofix simultaneously stripping the

parentheses from generators in the same linter pass, which causes

a SyntaxError.

Closes #3234.

With this fix:

```console

$ cat test.py

the_first_one = next(

(i for i in range(10) if i // 2 == 0)

)

$ cargo run --bin ruff check test.py --no-cache --select UP034,COM812 --fix

Finished dev [unoptimized + debuginfo] target(s) in 0.08s

Running `target/debug/ruff check test.py --no-cache --select UP034,COM812 --fix`

Found 1 error (1 fixed, 0 remaining).

$ cat test.py

the_first_one = next(

i for i in range(10) if i // 2 == 0

)

```

* Use format

---------

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>

Renames the following rules that stood out to me at a glance as needing better names:

- `or-true` to `expr-or-true`

- `and-false` to `expr-and-false`

- `a-or-not-a` to `expr-or-not-expr`

- `a-and-not-a` to `expr-and-not-expr`

Related to #2902.

PYI009 and PYI010 are very similar: both require `...` in function and class bodies in stubs.

PYI021 bans docstrings in stubs.

I think all of these rules should be relatively straightforward to implement autofixes for, but we can do that later once all the other rules have been added.

rel: https://github.com/charliermarsh/ruff/issues/848

In ruff-lsp (https://github.com/charliermarsh/ruff-lsp/pull/76) we want to add a "Disable \<rule\> for this line" quickfix. However, finding the correct line into which the `noqa` comment should be inserted is non-trivial (multi-line strings for example).

Ruff already has this info, so expose it in the JSON output for use by ruff-lsp.

This PR enables us to apply the proper quotation marks, including support for escapes. There are some significant TODOs, especially around implicit concatenations like:

```py

(

"abc"

"def"

)

```

Which are represented as a single AST node, which requires us to tokenize _within_ the formatter to identify all the individual string parts.

I manually changed these in #3080 and #3083 to get the tests passing (with notes around the deviations) -- but that's no longer necessary, now that we have proper testing that takes deviations into account.

This just re-formats all the `.py.expect` files with Black, both to add a trailing newline and be doubly-certain that they're correctly formatted.

I also ensured that we add a hard line break after each statement, and that we avoid including an extra newline in the generated Markdown (since the code should contain the exact expected newlines).

This PR changes the testing infrastructure to run all black tests and:

* Pass if Ruff and Black generate the same formatting

* Fail and write a markdown snapshot that shows the input code, the differences between Black and Ruff, Ruff's output, and Black's output

This is achieved by introducing a new `fixture` macro (open to better name suggestions) that "duplicates" the attributed test for every file that matches the specified glob pattern. Creating a new test for each file over having a test that iterates over all files has the advantage that you can run a single test, and that test failures indicate which case is failing.

The `fixture` macro also makes it straightforward to, e.g., set up our own spec tests that test very specific formatting by creating a new folder and using insta to assert the formatted output.

I worked on #2993 and ran into issues that the formatter tests are failing on Windows because `writeln!` emits `\n` as line terminator on all platforms, but `git` on Windows converted the line endings in the snapshots to `\r\n`.

I then tried to replicate the issue on my Windows machine and was surprised that all linter snapshot tests are failing on my machine. I figured out after some time that it is due to my global git config keeping the input line endings rather than converting to `\r\n`.

Luckily, I've been made aware of #2033 which introduced an "override" for the `assert_yaml_snapshot` macro that normalizes new lines, by splitting the formatted string using the platform-specific newline character. This is a clever approach and gives nice diffs for multiline fixes but makes assumptions about the setup contributors use and requires special care whenever we use line endings inside of tests.

I recommend that we remove the special new line handling and use `.gitattributes` to enforce the use of `LF` on all platforms ([guide](https://docs.github.com/en/get-started/getting-started-with-git/configuring-git-to-handle-line-endings)). This gives us platform-agnostic tests without having to worry about line endings in our tests or different git configurations.

## Note

It may be necessary for Windows contributors to run the following command to update the line endings of their files

```bash

git rm --cached -r .

git reset --hard

```

When creating a dict with string keys, some prefer to call dict instead of writing a dict literal.

For example: `dict(a=1, b=2, c=3)` instead of `{"a": 1, "b": 2, "c": 3}`.

This PR removes the `default_members` from the workspace configuration.

## Why

I'm not familiar with the motivation for why the `default_members` setting was added initially, and I do not object to keeping it. I'll explain my motivation for removing it below.

My main reason for removing the `default_members` override is that new contributors may not know that `cargo test`, `cargo build`, and other commands only run on a subset of crates. They may then be surprised that their PRs are failing in CI, but everything works locally.

My guess why `default_members` was added is to speed up the development workflow. That's fair, but I question the value because `ruff` is the heaviest crate to build.

This extends the autofix for TID252 to work for relative imports without `module` (i.e. `from .. import`). Tested with `matplotlib` and `bokeh`.

(Previously it would panic on unwrap of the module)

Note that pandas has [replaced](6057d7a93e) `absolufy-imports` with `ruff` now!

# Summary

This allows users to do things like:

```py

# ruff: noqa: F401

```

...to ignore all `F401` violations in a file. It's equivalent to `per-file-ignores`, but allows users to specify the behavior inline.

Note that Flake8 does _not_ support this, so we _don't_ respect `# flake8: noqa: F401`. (Flake8 treats that as equivalent to `# flake8: noqa`, so ignores _all_ errors in the file. I think all of [these usages](https://cs.github.com/?scopeName=All+repos&scope=&q=%22%23+flake8%3A+noqa%3A+%22) are probably mistakes!)

A couple notes on the details:

- If a user has `# ruff: noqa: F401` in the file, but also `# noqa: F401` on a line that would legitimately trigger an `F401` violation, we _do_ mark that as "unused" for `RUF100` purposes. This may be the wrong choice. The `noqa` is legitimately unused, but it's also not "wrong". It's just redundant.

- If a user has `# ruff: noqa: F401`, and runs `--add-noqa`, we _won't_ add `# noqa: F401` to any lines (which seems like the obvious right choice to me).

Closes #1054 (which has some extra pieces that I'll carve out into a separate issue).

Closes #2446.

- Implement N999 (following flake8-module-naming) in pep8_naming

- Refactor pep8_naming: split rules.rs into file per rule

- Documentation for majority of the violations

Closes https://github.com/charliermarsh/ruff/issues/2734

This rule guards against `asyncio.create_task` usages of the form:

```py

asyncio.create_task(coordinator.ws_connect()) # Error

```

...which can lead to unexpected bugs due to the lack of a strong reference to the created task. See Will McGugan's blog post for reference: https://textual.textualize.io/blog/2023/02/11/the-heisenbug-lurking-in-your-async-code/.

Note that we can't detect issues like:

```py

def f():
    # Stored as `task`, but never used...
    task = asyncio.create_task(coordinator.ws_connect())

```

So that would be a false negative. But this catches the common case of failing to assign the task in any way.
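
For reference, one common way to keep a strong reference to a fire-and-forget task is to store it in a collection and drop it when the task completes. The sketch below illustrates that pattern; the names `background_tasks`, `spawn`, and `ws_connect` are hypothetical and not part of this PR.

```python
import asyncio

# Hypothetical module-level registry; the name is illustrative only.
background_tasks: set[asyncio.Task] = set()


def spawn(coro) -> asyncio.Task:
    """Create a task and keep a strong reference to it until it finishes."""
    task = asyncio.create_task(coro)
    background_tasks.add(task)
    # Drop the reference once the task completes so the set does not grow.
    task.add_done_callback(background_tasks.discard)
    return task


async def ws_connect() -> str:
    # Stand-in for real work such as `coordinator.ws_connect()`.
    await asyncio.sleep(0)
    return "connected"


async def main() -> str:
    task = spawn(ws_connect())
    return await task


result = asyncio.run(main())
print(result)  # connected
```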

Closes #2809.

For example:

$ ruff check --select=EM<Tab>

EM -- flake8-errmsg

EM10 EM1 --

EM101 -- raw-string-in-exception

EM102 -- f-string-in-exception

EM103 -- dot-format-in-exception

(You will need to enable autocompletion as described

in the Autocompletion section in the README.)

Fixes #2808.

(The --help help change in the README is due to a clap bug,

for which I already submitted a fix:

https://github.com/clap-rs/clap/pull/4710.)

# Summary

This PR contains the code for the autoformatter proof-of-concept.

## Crate structure

The primary formatting hook is the `fmt` function in `crates/ruff_python_formatter/src/lib.rs`.

The current formatter approach is outlined in `crates/ruff_python_formatter/src/lib.rs`, and is structured as follows:

- Tokenize the code using the RustPython lexer.

- In `crates/ruff_python_formatter/src/trivia.rs`, extract a variety of trivia tokens from the token stream. These include comments, trailing commas, and empty lines.

- Generate the AST via the RustPython parser.

- In `crates/ruff_python_formatter/src/cst.rs`, convert the AST to a CST structure. As of now, the CST is nearly identical to the AST, except that every node gets a `trivia` vector. But we might want to modify it further.

- In `crates/ruff_python_formatter/src/attachment.rs`, attach each trivia token to the corresponding CST node. The logic for this is mostly in `decorate_trivia` and is ported almost directly from Prettier (given each token, find its preceding, following, and enclosing nodes, then attach the token to the appropriate node in a second pass).

- In `crates/ruff_python_formatter/src/newlines.rs`, normalize newlines to match Black’s preferences. This involves traversing the CST and inserting or removing `TriviaToken` values as we go.

- Call `format!` on the CST, which delegates to type-specific formatter implementations (e.g., `crates/ruff_python_formatter/src/format/stmt.rs` for `Stmt` nodes, and similar for `Expr` nodes; the others are trivial). Those type-specific implementations delegate to kind-specific functions (e.g., `format_func_def`).

## Testing and iteration

The formatter is being developed against the Black test suite, which was copied over in-full to `crates/ruff_python_formatter/resources/test/fixtures/black`.

The Black fixtures had to be modified to create [`insta`](https://github.com/mitsuhiko/insta)-compatible snapshots, which now exist in the repo.

My approach thus far has been to try and improve coverage by tackling fixtures one-by-one.

## What works, and what doesn’t

- *Most* nodes are supported at a basic level (though there are a few stragglers at time of writing, like `StmtKind::Try`).

- Newlines are properly preserved in most cases.

- Magic trailing commas are properly preserved in some (but not all) cases.

- Trivial leading and trailing standalone comments mostly work (although maybe not at the end of a file).

- Inline comments, and comments within expressions, often don’t work -- they work in a few cases, but it’s one-off right now. (We’re probably associating them with the “right” nodes more often than we are actually rendering them in the right place.)

- We don’t properly normalize string quotes. (At present, we just repeat any constants verbatim.)

- We’re mishandling a bunch of wrapping cases (if we treat Black as the reference implementation). Here are a few examples (demonstrating Black's stable behavior):

```py

# In some cases, if the end expression is "self-closing" (functions,
# lists, dictionaries, sets, subscript accesses, and any length-two
# boolean operations that end in these elements), Black
# will wrap like this...
if some_expression and f(
    b,
    c,
    d,
):
    pass

# ...whereas we do this:
if (
    some_expression
    and f(
        b,
        c,
        d,
    )
):
    pass

# If function arguments can fit on a single line, then Black will
# format them like this, rather than exploding them vertically.
if f(
    a, b, c, d, e, f, g, ...
):
    pass

```

- We don’t properly preserve parentheses in all cases. Black preserves parentheses in some but not all cases.

This PR removes the dependency on `ruff_rowan` (i.e., Rome's fork of rust-analyzer's `rowan`), and in turn, trims out a lot of code in `ruff_formatter` that isn't necessary (or isn't _yet_ necessary) to power the autoformatter.

We may end up pulling some of this back in -- TBD. For example, the autoformatter has its own comment representation right now, but we may eventually want to use the `comments.rs` data structures defined in `rome_formatter`.

Given our current parser abstractions, we need the ability to tell `ruff_formatter` to print a pre-defined slice from a fixed string of source code, which we've introduced here as `FormatElement::StaticTextSlice`.

The Ruff autoformatter is going to be based on an intermediate representation (IR) formatted via [Wadler's algorithm](https://homepages.inf.ed.ac.uk/wadler/papers/prettier/prettier.pdf). This is architecturally similar to [Rome](https://github.com/rome/tools), Prettier, [Skip](https://github.com/skiplang/skip/blob/master/src/tools/printer/printer.sk), and others.

This PR adds a fork of the `rome_formatter` crate from [Rome](https://github.com/rome/tools), renamed here to `ruff_formatter`, which provides generic definitions for a formatter IR as well as a generic IR printer. (We've also pulled in `rome_rowan`, `rome_text_size`, and `rome_text_edit`, though some of these will be removed in future PRs.)

Why fork? `rome_formatter` contains code that's specific to Rome's AST representation (e.g., it relies on a fork of rust-analyzer's `rowan`), and we'll likely want to support different abstractions and formatting capabilities (there are already a few changes coming in future PRs). Once we've dropped `ruff_rowan` and trimmed down `ruff_formatter` to the code we currently need, it's also not a huge surface area to maintain and update.

In 28c9263722 I introduced automatic

linkification of option references in rule documentation,

which automatically converted the following:

## Options

* `namespace-packages`

to:

## Options

* [`namespace-packages`]

[`namespace-packages`]: ../../settings#namespace-packages

While the above is a correct CommonMark[1] link definition,

what I was missing was that we used mkdocs for our documentation

generation, which as it turns out uses a non-CommonMark-compliant

Markdown parser, namely Python-Markdown, which contrary to CommonMark

doesn't support link definitions containing code tags.

This commit fixes the broken links via a regex hack.

[1]: https://commonmark.org/

Currently the define_rule_mapping! macro generates both the Rule enum as

well as the RuleCodePrefix enum and the mapping between the two. After

this commit series the macro will only generate the Rule enum and the

RuleCodePrefix enum and the mapping will be generated by a new map_codes

proc macro, so we rename the macro now to fit its new purpose.

Same reasoning as for the previous commit ... one &'static str

becomes two &'static str because we split the RuleCodePrefix enum.

Note that the .unwrap() we have to add now, will actually

be removed in the 6th commit.

Rule::noqa_code previously returned a single &'static str,

which was possible because we had one enum listing all

rule code prefixes. This commit series will however split up

the RuleCodePrefix enum into several enums ... so we'll end up

with two &'static str ... this commit wraps the return type

of Rule::noqa_code into a newtype so that we can easily change

it to return two &'static str in the 6th commit of this series.

Post this commit series several codes can be mapped to a single rule,

this commit therefore renames Rule::code to Rule::noqa_code,

which is the code that --add-noqa will add to ignore a rule.

Previously the rule documentation referenced configuration options

via full https:// URLs, which was bad for several reasons:

* changing the website would mean you'd have to change all URLs

* the links didn't work when building mkdocs locally

* the URLs showed up in the `ruff rule` output

* broken references weren't detected by our CI

This commit solves all of these problems by post-processing the

Markdown, recognizing sections such as:

## Options

* `flake8-tidy-imports.ban-relative-imports`

`cargo dev generate-all` will automatically linkify such references

and panic if the referenced option doesn't exist.

Note that the option can also be linked in the other Markdown sections

via e.g. [`flake8-tidy-imports.ban-relative-imports`] since

the post-processing code generates a CommonMark link definition.
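
The post-processing step described above can be sketched roughly as follows. This is a Python illustration, not the Rust code behind `cargo dev generate-all`; `KNOWN_OPTIONS`, `SETTINGS_URL`, and `linkify_options` are hypothetical names, and using the option name verbatim as the URL anchor is an assumption.

```python
import re

# Hypothetical inputs: the set of valid options and the settings page URL.
KNOWN_OPTIONS = {"namespace-packages"}
SETTINGS_URL = "../../settings"

OPTION_BULLET = re.compile(r"^\* `([^`]+)`$")


def linkify_options(markdown: str) -> str:
    """Turn option bullets under `## Options` into reference-style links."""
    body, definitions, in_options = [], [], False
    for line in markdown.splitlines():
        if line.startswith("#"):
            in_options = line.strip() == "## Options"
        match = OPTION_BULLET.match(line) if in_options else None
        if match is None:
            body.append(line)
            continue
        option = match.group(1)
        if option not in KNOWN_OPTIONS:
            # Stands in for the panic on a nonexistent option.
            raise ValueError(f"unknown option: {option}")
        body.append(f"* [`{option}`]")
        # Assumption: the anchor is the option name itself.
        definitions.append(f"[`{option}`]: {SETTINGS_URL}#{option}")
    if not definitions:
        return markdown
    return "\n".join(body + [""] + definitions) + "\n"


print(linkify_options("## Options\n\n* `namespace-packages`\n"))
```

Raising on an unknown option is what lets broken references fail the build instead of silently producing dead links.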

Resolves #2766.

The motivating issue here is of the following form:

```py

try:
    raise Exception("We want to hide this error message")
except Exception:
    try:
        raise Exception("We want to show this")
    except Exception as exc:
        raise exc from None

```

However, I think we should avoid this if _any_ cause is present, since causes require a named exception.

Closes #2814.

The synopsis is as follows.

List all top-level config keys:

$ ruff config

allowed-confusables

builtins

cache-dir

... etc.

List all config keys in a specific section:

$ ruff config mccabe

max-complexity

Describe a specific config option:

$ ruff config mccabe.max-complexity

The maximum McCabe complexity to allow before triggering `C901` errors.

Default value: 10

Type: int

Example usage:

```toml

# Flag errors (`C901`) whenever the complexity level exceeds 5.

max-complexity = 5

```

The new `ruff rule` output format introduced in

551b810aeb doesn't print Markdown but

rather some rich text with escape sequences for colors and links,

it's actually the "text" format that prints Markdown, so naming the new

format "markdown" is very confusing. This commit therefore renames it to

"pretty".

This isn't a breaking change since there hasn't been a release yet.

This was just an oversight and misunderstanding on my part. We had some helpful tests, but I misunderstood the "right" behavior so thought they were passing.

Closes #2761.

if_all_same(codes.values().cloned()).unwrap_or_default()

was quite unreadable because it wasn't obvious that codes.values() are

the prefixes. It's better to introduce another Map rather than having

Maps within Maps.

It's not only `.pyi` that should be exempt for this, but also for example scripts which don't have an extension, explicitly passed in command line args.

```console

❯ cargo run rule B017

Finished dev [unoptimized + debuginfo] target(s) in 0.13s

Running `target/debug/ruff rule B017`

no-assert-raises-exception

Code: B017 (flake8-bugbear)

### What it does

Checks for `self.assertRaises(Exception)`.

## Why is this bad?

`assertRaises(Exception)` can lead to your test passing even if the

code being tested is never executed due to a typo.

Either assert for a more specific exception (builtin or custom), use

`assertRaisesRegex` or the context manager form of `assertRaises`.

```

This PR adds a configuration option to inhibit ANN* violations for functions that have no other annotations either, for easier gradual typing of a large codebase.

Along with the logical line detection, this adds 14 of the missing `pycodestyle` rules.

For now, this is all gated behind a `logical_lines` feature that's off-by-default, which will let us implement all rules prior to shipping, since we want to couple the release of these rules with new defaults and instructions.

Prefer the version from a currently active virtualenv over a version

from `pip install --user`. Add the .exe extension on Windows, and

find the path for `pip install --user` correctly on Windows.

Signed-off-by: Anders Kaseorg <andersk@mit.edu>

LinterCategory was somewhat misnamed since it's not actually

a category for linters but rather a category for upstream lints.

Since we want to introduce our own categories, naming the type

UpstreamCategory is more clear.

Our existing solution was having trouble with parenthesized expressions. This actually may affect more than `SIM300`, but let's address them as they come up.

Closes #2466.

The downside here is that we have to leave blank `# noqa` directives intact. Otherwise, we risk removing necessary `# noqa` coverage for rules that aren't selected.

Closes #2254.

Previously, if you passed in a file on the command-line via `--config`, it had to be named either `pyproject.toml` or `ruff.toml` -- otherwise, we errored. I think this is too strict. `pyproject.toml` is a special name in the ecosystem, so we should require _that_; but otherwise, let's just assume it's in `ruff.toml` format.

As an alternative, we could add a `--pyproject` argument for `pyproject.toml`, and assume anything passed to `--config` is in `ruff.toml` format. But that _would_ be a breaking change and is arguably more confusing. (This isn't a breaking change, since it only loosens the CLI.)

Closes #2462.

I moved the `self.in_annotation` guard out of the version check in #1563. But, I think that was a mistake. It was done to resolve #1560, but the fix in that case _should've_ been to set a different Python version.

Closes #2447.

As an example, if you have `single` as your preferred style, we'll now allow this:

```py

assert s.to_python(123) == (

"123 info=SerializationInfo(include=None, exclude=None, mode='python', by_alias=True, exclude_unset=False, "

"exclude_defaults=False, exclude_none=False, round_trip=False)"

)

```

Previously, the second line of the implicit string concatenation would be flagged as invalid, despite the _first_ line requiring double quotes. (Note that we'll accept either single or double quotes for that second line.)

Mechanically, this required that we process sequences of `Tok::String` rather than a single `Tok::String` at a time. Prior to iterating over the strings in the sequence, we check if any of them require the non-preferred quote style; if so, we let _any_ of them use it.
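
The sequence-based check can be sketched in Python with the standard `tokenize` module. This is an illustration only, not the Rust implementation: `string_sequences` and `flagged_lines` are hypothetical names, the sketch assumes non-triple-quoted literals, and it skips f-strings (which newer Python versions no longer tokenize as plain `STRING` tokens).

```python
import io
import tokenize


def string_sequences(source: str):
    """Yield lists of adjacent STRING tokens (implicit concatenations)."""
    group = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.STRING:
            group.append(tok)
        elif tok.type in (tokenize.NL, tokenize.COMMENT):
            continue  # line breaks and comments do not end a sequence
        elif group:
            yield group
            group = []
    if group:
        yield group


def flagged_lines(source: str, preferred: str = "'") -> list[int]:
    """Return lines whose string literal needlessly uses the other quote."""
    flagged = []
    for group in string_sequences(source):
        literals = [tok.string.lstrip("rRbBuU") for tok in group]
        # If any part contains the preferred quote, let every part in the
        # sequence use the non-preferred style.
        if any(preferred in literal[1:-1] for literal in literals):
            continue
        for tok, literal in zip(group, literals):
            if literal[0] != preferred:
                flagged.append(tok.start[0])
    return flagged


source = '''x = (
    "it's here, "
    "no apostrophe"
)
y = "plain"
'''
print(flagged_lines(source))  # [5]
```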

Closes #2400.

In order to avoid confusing new developers. When a debug build panics

chances are that the panic is caused by local changes and should in

fact not be reported on GitHub.

RuleSelector implemented PartialOrd & Ord because ruff::flake8_to_ruff

was using RuleSelector within a BTreeSet (which requires contained

elements to implement Ord). There however is no inherent order to

rule selectors, so PartialOrd & Ord should not be implemented.

This commit changes BTreeSet<RuleSelector> to HashSet<RuleSelector>

and adds explicit sort calls based on the serialized strings,

letting us drop the PartialOrd & Ord impls in favor of a Hash impl.

This is a followup to #2361. The isort check still had an issue in a rather specific case: files with a multiline import, indented with tabs, and not containing any indented blocks.

The root cause is this: [`Stylist`'s indentation detection](ad8693e3de/src/source_code/stylist.rs (L163-L172)) works by finding `Indent` tokens to determine the type of indentation used by a file. This works for indented code blocks (loops/classes/functions/etc) but does not work for multiline values, so falls back to 4 spaces if the file doesn't contain code blocks.

I considered a few possible solutions:

1. Fix `detect_indentation` to avoid tokenizing and instead use some other heuristic to determine indentation. This would have the benefit of working in other places where this is potentially an issue, but would still fail if the file doesn't contain any indentation at all, and would need to fall back to option 2 anyways.

2. Add an option for specifying the default indentation in Ruff's config. I think this would be confusing, since it wouldn't affect the detection behavior and would only operate as a fallback, has no other current application, and would probably end up being overloaded for other things.

3. Relax the isort check by comparing the expected and actual code's lexed tokens. This would require an additional lexing step.

4. Relax the isort check by comparing the expected and actual code modulo whitespace at the start of lines.

This PR does approach 4, which in addition to being the simplest option, has the (expected, although I didn't benchmark) added benefit of improved performance, since the check no longer needs to do two allocations for the two `dedent` calls. I also believe that the check is still correct enough for all practical purposes.
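
As an illustration of approach 4, the relaxed comparison amounts to something like the Python sketch below. The function name is hypothetical, and the real check lives in Ruff's Rust code, where it can avoid the intermediate lists built here.

```python
def matches_modulo_indentation(expected: str, actual: str) -> bool:
    """Compare two code blocks line by line, ignoring leading whitespace."""
    expected_lines = [line.lstrip() for line in expected.splitlines()]
    actual_lines = [line.lstrip() for line in actual.splitlines()]
    return expected_lines == actual_lines


# A tab-indented multiline import compared with a space-indented rendering.
actual = "from a import (\n\tb,\n\tc,\n)\n"
expected = "from a import (\n    b,\n    c,\n)\n"
print(matches_modulo_indentation(expected, actual))  # True
```

The trade-off is that two blocks differing only in indentation depth compare as equal, which is why the check is "correct enough" rather than exact.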

This is another temporary fix for the problem described in #2289 and #2292. Rather than merely warning, we now disable the incompatible rules (in addition to the warning). I actually think this is quite a reasonable solution, but we can revisit later. I just can't bring myself to ship another release with autofix broken-by-default 😂

If `allow-multiline = false` is set, then if the user enables `explicit-string-concatenation` (`ISC003`), there's no way for them to create valid multiline strings. This PR notes that they should turn off `ISC003`.

Closes #2362.

We now only trigger `logging-exc-info` and `logging-redundant-exc-info` when in an exception handler, with an `exc_info` that isn't `true` or `sys.exc_info()`.

Closes #2356.

Ruff allows rules to be enabled with `select` and disabled with

`ignore`, where the more specific rule selector takes precedence,

for example:

`--select ALL --ignore E501` selects all rules except E501

`--ignore ALL --select E501` selects only E501

(If both selectors have the same specificity ignore selectors

take precedence.)

Ruff always had two quirks:

* If `pyproject.toml` specified `ignore = ["E501"]` then you could

previously not override that with `--select E501` on the command-line

(since the resolution didn't take into account that the select was

specified after the ignore).

* If `pyproject.toml` specified `select = ["E501"]` then you could

previously not override that with `--ignore E` on the command-line

(since the resolution didn't take into account that the ignore was

specified after the select).

Since d067efe265 (#1245)

`extend-select` and `extend-ignore` always override

`select` and `ignore` and are applied iteratively in pairs,

which introduced another quirk:

* If some `pyproject.toml` file specified `extend-select`

or `extend-ignore`, `select` and `ignore` became pretty much

unreliable after that with no way of resetting that.

This commit fixes all of these quirks by making later configuration

sources take precedence over earlier configuration sources.
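
A simplified model of this resolution order is sketched below in Python. It rests on several assumptions: the rule universe `ALL_RULES` is made up, every source is layered on top of the previous result, and the differences between `select` and `extend-select` are not modelled. It shows only the two principles stated above (specificity within a source, later sources over earlier ones) and is not Ruff's actual implementation.

```python
ALL_RULES = ["E401", "E501", "F401", "W605"]  # hypothetical rule universe


def matches(selector: str, rule: str) -> bool:
    return selector == "ALL" or rule.startswith(selector)


def specificity(selector: str) -> int:
    # `ALL` is the least specific selector; longer prefixes are more specific.
    return 0 if selector == "ALL" else len(selector)


def resolve(sources: list[dict]) -> set[str]:
    """Resolve enabled rules from sources ordered earliest to latest."""
    enabled: set[str] = set()
    for source in sources:  # e.g. pyproject.toml first, then the command line
        for rule in ALL_RULES:
            candidates = [
                (specificity(selector), is_ignore)
                for key, is_ignore in (("select", False), ("ignore", True))
                for selector in source.get(key, [])
                if matches(selector, rule)
            ]
            if not candidates:
                continue  # this source says nothing about the rule
            # Highest specificity wins; on a tie, ignore (True) wins.
            _, is_ignore = max(candidates)
            if is_ignore:
                enabled.discard(rule)
            else:
                enabled.add(rule)
    return enabled


# `--select ALL --ignore E501` selects everything except E501.
print(sorted(resolve([{"select": ["ALL"], "ignore": ["E501"]}])))
# A later `--ignore E` overrides an earlier `select = ["E501"]`.
print(sorted(resolve([{"select": ["E501"]}, {"ignore": ["E"]}])))
```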

While this is a breaking change, we expect most ruff configuration

files to not rely on the previous unintuitive behavior.

Previously we tested the resolve_codes helper function directly.

Since we want to rewrite our resolution logic in the next commit,

this commit changes the tests to test the more high-level From impl.

This PR fixes two related issues with using isort on files using tabs for indentation:

- Multiline imports are never considered correctly formatted, since the comparison with the generated code will always fail.

- Using autofix generates code that can have mixed indentation in the same line, for imports that are within nested blocks.

Previously Linter::parse_code("E401") returned

(Linter::Pycodestyle, "401") ... after this commit it returns

(Linter::Pycodestyle, "E401") instead, which is important

for the future implementation of the many-to-many mapping.

(The second value of the tuple isn't used currently.)

Fairly mechanical. Did a few of the simple cases manually to make sure things were working, and I think the rest will be easily achievable via a quick `fastmod` command.

ref #1871

I think we've never run into this case, since it's rare to import `*` from a module _and_ import some other member explicitly. But we were deviating from `isort` by placing the `*` after other members, rather than up-top.

Closes #2318.

`RUF100` does not take into account a rule ignored for a file via a `per-file-ignores` configuration. To see this, try the following pyproject.toml:

```toml

[tool.ruff.per-file-ignores]

"test.py" = ["F401"]

```

and this test.py file:

```python

import itertools # noqa: F401

```

Running `ruff --extend-select RUF100 test.py`, we should expect to get this error:

```

test.py:1:19: RUF100 Unused `noqa` directive (unused: `F401`)

```

The issue is that the per-file-ignores diagnostics are filtered out after the noqa checks, rather than before.

Fixes a regression introduced in eda2be6350 (but not yet released to users). (`-v` is a real flag, but it's an alias for `--verbose`, not `--version`.)

Closes #2299.

We probably want to introduce multiple explain subcommands and

overloading `explain` to explain it all seems like a bad idea.

We may want to introduce a subcommand to explain config options and

config options may end up having the same name as their rules, e.g. the

current `banned-api` is both a rule name (although not yet exposed to

the user) and a config option.

The idea is:

* `ruff rule` lists all rules supported by ruff

* `ruff rule <code>` explains a specific rule

* `ruff linter` lists all linters supported by ruff

* `ruff linter <name>` lists all rules/options supported by a specific linter

(After this commit only the 2nd case is implemented.)

This commit greatly simplifies the implementation of the CLI,

as well as the user experience (since --help no longer lists all

options even though many of them are in fact incompatible).

To preserve backwards compatibility as much as possible, aliases have

been added for the new subcommands, so for example the following two

commands are equivalent:

ruff explain E402 --format json

ruff --explain E402 --format json

However for this to work the legacy-format double-dash command has to

come first, i.e. the following no longer works:

ruff --format json --explain E402

Since ruff previously had an implicit default subcommand,

this is preserved for backwards compatibility, i.e. the following two

commands are equivalent:

ruff .

ruff check .

Previously ruff didn't complain about several argument combinations that

should have never been allowed, e.g:

ruff --explain RUF001 --line-length 33

previously worked but now rightfully fails since the explain command

doesn't support a `--line-length` option.

I presume the reasoning for not including clippy in `pre-commit` was that it passes all files. This can be turned off with `pass_filenames`, in which case it only runs once.

`cargo +nightly dev generate-all` is also added (when excluding `target` it does not give false positives).

(The overhead of these commands is not much when the build is there. People can always choose to run only certain hooks with `pre-commit run [hook] --all-files`)

Accessed attributes that are Python constants should be considered for yoda-conditions

```py

## Error

JediOrder.YODA == age # SIM300

## OK

age == JediOrder.YODA

```

~~PS: This PR will fail CI, as the `main` branch currently failing.~~

SIM300 currently doesn't take Python constants into account when looking for Yoda conditions, this PR fixes that behavior.

```python

# Errors

YODA == age # SIM300

YODA > age # SIM300

YODA >= age # SIM300

# OK

age == YODA

age < YODA

age <= YODA

```

Ref: <https://github.com/home-assistant/core/pull/86793>

This isn't super consistent with some other rules, but... if you have a lone body, with a `pass`, followed by a comment, it's probably surprising if it gets removed. Let's retain the comment.

Closes #2231.

Fixes a regression introduced in 4e4643aa5d.

We want the longest prefixes to be checked first so we of course

have to reverse the sorting when sorting by prefix length.
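As a rough illustration of why the sort order matters, here is a minimal Python sketch (the actual fix is in Rust; `resolve_prefix` and the prefix list are hypothetical names used only for this example). Sorting by length in descending order ensures that a short prefix cannot shadow a longer, more specific one:

```python
from typing import List, Optional


def resolve_prefix(code: str, prefixes: List[str]) -> Optional[str]:
    """Return the longest entry of `prefixes` that `code` starts with."""
    # reverse=True is the important part: with an ascending sort, "I" would
    # be tried before "INP" and would win for every "INP..." code.
    for prefix in sorted(prefixes, key=len, reverse=True):
        if code.startswith(prefix):
            return prefix
    return None


# Hypothetical prefix list for illustration.
PREFIXES = ["I", "INP", "PL", "PLC"]
print(resolve_prefix("INP001", PREFIXES))
print(resolve_prefix("I001", PREFIXES))
```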

Fixes #2210.

`ruff --help` previously listed 37 options in no particular order

(with niche options like --isolated being listed before essential

options such as --select). This commit remedies that and additionally

groups the options by making use of the Clap help_heading feature.

Note that while the source code has previously also referred to

--add-noqa, --show-settings, and --show-files as "subcommands"

this commit intentionally does not list them under the new

Subcommands section since contrary to --explain and --clean

combining them with most of the other options makes sense.

These were split into per-project licenses in #1648, but I don't like that they're no longer included in the distribution (due to current limitations in the `pyproject.toml` spec).

After this change:

```shell

> time cargo run -- -n $(find ../django -type f -name '*.py')

8.85s user 0.20s system 498% cpu 1.814 total

> time cargo run -- -n ../django

8.95s user 0.23s system 507% cpu 1.811 total

```

I also verified that we only hit the creation path once via some manual logging.

Closes #2154.

We already enforced pedantic clippy lints via the

following command in .github/workflows/ci.yaml:

cargo clippy --workspace --all-targets --all-features -- -D warnings -W clippy::pedantic

Additionally adding #![warn(clippy::pedantic)] to all main.rs and lib.rs

has the benefit that violations of pedantic clippy lints are also

reported when just running `cargo clippy` without any arguments and

are thereby also picked up by LSP[1] servers such as rust-analyzer[2].

However for rust-analyzer to run clippy you'll have to configure:

"rust-analyzer.check.command": "clippy",

in your editor.[3]

[1]: https://microsoft.github.io/language-server-protocol/

[2]: https://rust-analyzer.github.io/

[3]: https://rust-analyzer.github.io/manual.html#configuration

From discussion on https://github.com/charliermarsh/ruff/pull/2123

I didn't originally have a helpers file so I put the function in both

places but now that a helpers file exists it seems logical for it to be

there.

This commit removes rule redirects such as ("U" -> "UP") from the

RuleCodePrefix enum because they complicated the generation of that enum

(which we want to change to be prefix-agnostic in the future).

To preserve backwards compatibility redirects are now resolved

before the strum-generated RuleCodePrefix::from_str is invoked.

This change also brings two other advantages:

* Redirects are now only defined once

(previously they had to be defined twice:

once in ruff_macros/src/rule_code_prefix.rs

and a second time in src/registry.rs).

* The deprecated redirects will no longer be suggested in IDE

autocompletion within pyproject.toml since they are now no

longer part of the ruff.schema.json.
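The "resolve redirects first, then parse" order described above can be sketched in Python as follows. This is only an analogy for the Rust change: the enum members and the `U004` redirect are assumptions for illustration, and only the `"U" -> "UP"` redirect is taken from this message.

```python
from enum import Enum


class RuleCodePrefix(Enum):
    """Stand-in for the generated enum; note that it has no legacy members."""

    UP = "UP"
    UP004 = "UP004"


# Redirects live in exactly one table, outside the enum.
# ("U004" is a hypothetical entry added for this example.)
REDIRECTS = {"U": "UP", "U004": "UP004"}


def parse_prefix(text: str) -> RuleCodePrefix:
    """Resolve a legacy alias first, then defer to the plain enum lookup."""
    resolved = REDIRECTS.get(text, text)
    return RuleCodePrefix(resolved)
```

Because the aliases never become enum members, anything generated from the enum (such as a schema) would not list them, while old configuration values still parse.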

Yet another refactor to let us implement the many-to-many mapping

between codes and rules in a prefix-agnostic way.

We want to break up the RuleCodePrefix[1] enum into smaller enums.

To facilitate that this commit introduces a new wrapping type around

RuleCodePrefix so that we can start breaking it apart.

[1]: Actually `RuleCodePrefix` is the previous name of the autogenerated

enum ... I renamed it in b19258a243 to

RuleSelector since `ALL` isn't a prefix. This commit now renames it back

but only because the new `RuleSelector` wrapper type, introduced in this

commit, will let us move the `ALL` variant from `RuleCodePrefix` to

`RuleSelector` in the next commit.

At present, `ISC001` and `ISC002` flag concatenations like the following:

```py

"a" "b" # ISC001

"a" \

"b" # ISC002

```

However, multiline concatenations are allowed.

This PR adds a setting:

```toml

[tool.ruff.flake8-implicit-str-concat]

allow-multiline = false

```

Which extends `ISC002` to _also_ flag multiline concatenations, like:

```py

(
    "a"  # ISC002
    "b"
)

```

Note that this is backwards compatible, as `allow-multiline` defaults to `true`.
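To make the three cases concrete, here is a small Python sketch that classifies adjacent string literals using the standard `tokenize` module. It is not how ruff implements the rules (ruff works on its own Rust token stream); the function name and the `kind` labels are made up for this example, and it only considers plain string literals.

```python
import io
import tokenize
from typing import List, Tuple


def implicit_concatenations(source: str) -> List[Tuple[int, str]]:
    """Return (row, kind) for each pair of adjacent string literals."""
    findings = []
    prev = None  # previous STRING token, if only NL/comments followed it
    crossed_nl = False  # saw a non-logical newline since `prev`
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.NL:
            crossed_nl = True
        elif tok.type == tokenize.COMMENT:
            continue
        elif tok.type == tokenize.STRING:
            if prev is not None:
                if prev.end[0] == tok.start[0]:
                    kind = "single-line"  # "a" "b"
                elif crossed_nl:
                    kind = "multiline"  # inside brackets, on separate lines
                else:
                    kind = "continuation"  # joined by a backslash
                findings.append((prev.start[0], kind))
            prev, crossed_nl = tok, False
        else:
            # Any other token (operator, name, ...) breaks the adjacency.
            prev, crossed_nl = None, False
    return findings
```

In this sketch, `allow-multiline = false` would correspond to also reporting the `"multiline"` findings, which are otherwise ignored.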

Ruff supports more than `known-first-party`, `known-third-party`, `extra-standard-library`, and `src` nowadays.

Not sure if this is the best wording. Suggestions welcome!

Extend test fixture to verify the targeting.

Includes two "attribute docstrings" which per PEP 257 are not recognized by the Python bytecode compiler or available as runtime object attributes. They are not available for us either at time of writing, but include them for completeness anyway in case they one day are.

To enable ruff_dev to automatically generate the rule Markdown tables in

the README the ruff library contained the following function:

Linter::codes() -> Vec<RuleSelector>

which was slightly changed to `fn prefixes(&self) -> Prefixes` in

9dc66b5a65 to enable ruff_dev to split

up the Markdown tables for linters that have multiple prefixes

(pycodestyle has E & W, Pylint has PLC, PLE, PLR & PLW).

The definition of this method was however largely redundant with the

#[prefix] macro attributes in the Linter enum, which are used to

derive the Linter::parse_code function, used by the --explain command.

This commit removes the redundant Linter::prefixes by introducing a

same-named method with a different signature to the RuleNamespace trait:

fn prefixes(&self) -> &'static [&'static str];

As well as implementing IntoIterator<Rule> for &Linter. We extend the

existing RuleNamespace proc macro to automatically derive both

implementations from the Linter enum definition.

To support the previously mentioned Markdown table splitting we

introduce a very simple hand-written method to the Linter impl:

fn categories(&self) -> Option<&'static [LinterCategory]>;

ParseCode was a fitting name since the trait only contained a single

parse_code method ... since we now however want to introduce an

additional `prefixes` method RuleNamespace is more fitting.

Using Ident as the key type is inconvenient since creating an Ident

requires the specification of a Span, which isn't actually used by

the Hash implementation of Ident.

If a file doesn't have a `package`, then it must both be in a directory that lacks an `__init__.py`, and a directory that _isn't_ marked as a namespace package.
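The condition can be written out as a short Python sketch. This only restates the rule above and is not the Rust implementation; `has_package` and the `namespace_packages` parameter are hypothetical names.

```python
from pathlib import Path
from typing import Set


def has_package(path: Path, namespace_packages: Set[Path]) -> bool:
    """A file has a package if its directory has `__init__.py` or is a
    configured namespace package; otherwise it has none."""
    directory = path.parent
    if (directory / "__init__.py").is_file():
        return True
    return directory in namespace_packages
```

So a file lacks a package only when both checks fail.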

Closes #2075.

- optional `prefix` argument for `add_plugin.py`

- rules directory instead of `rules.rs`

- pathlib syntax

- fix test case where code was added instead of name

Example:

```

python scripts/add_plugin.py --url https://pypi.org/project/example/1.0.0/ example --prefix EXA

python scripts/add_rule.py --name SecondRule --code EXA002 --linter example

python scripts/add_rule.py --name FirstRule --code EXA001 --linter example

python scripts/add_rule.py --name ThirdRule --code EXA003 --linter example

```

Note that it breaks compatibility with 'old style' plugins (generation works fine, but namespaces need to be changed):

```

python scripts/add_rule.py --name DoTheThing --code PLC999 --linter pylint

```

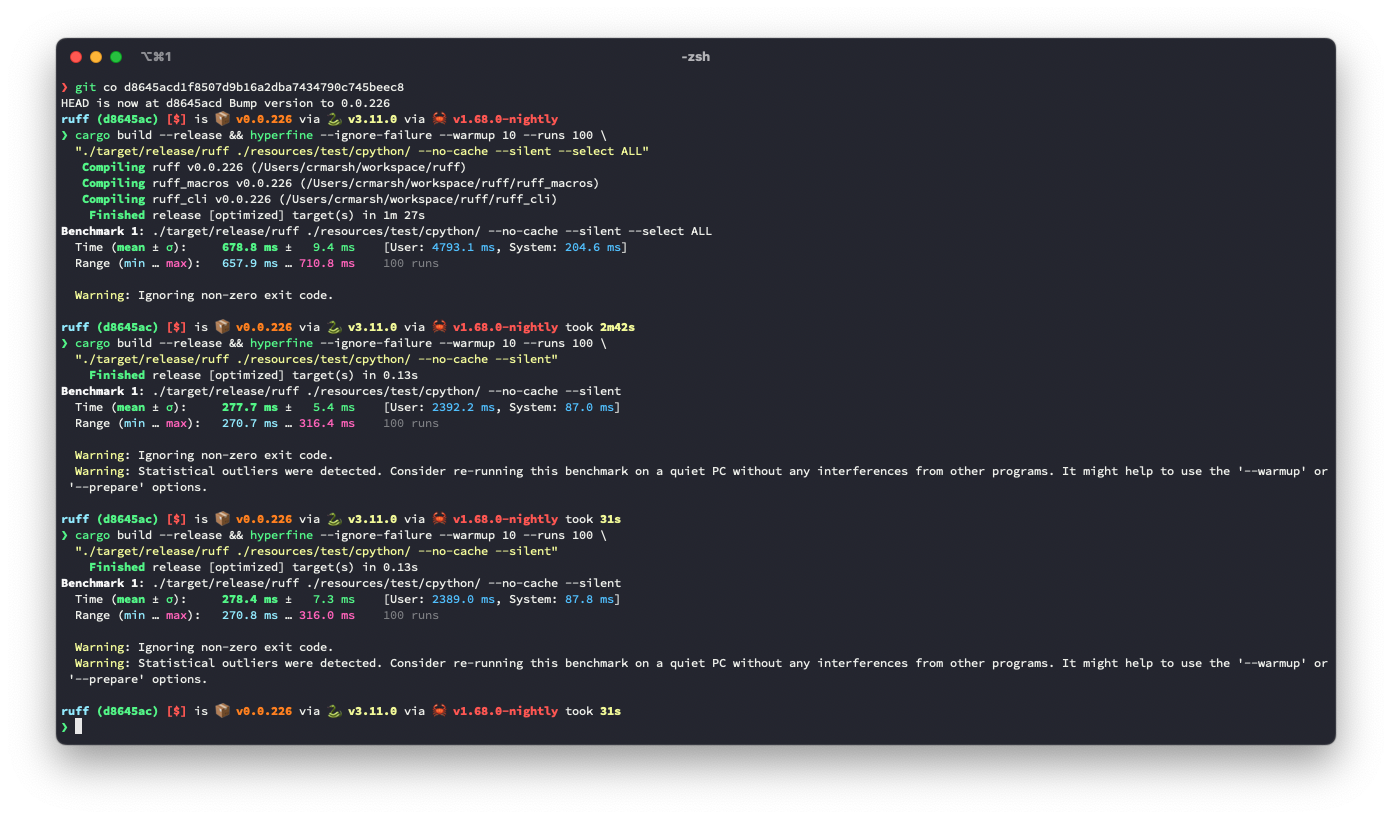

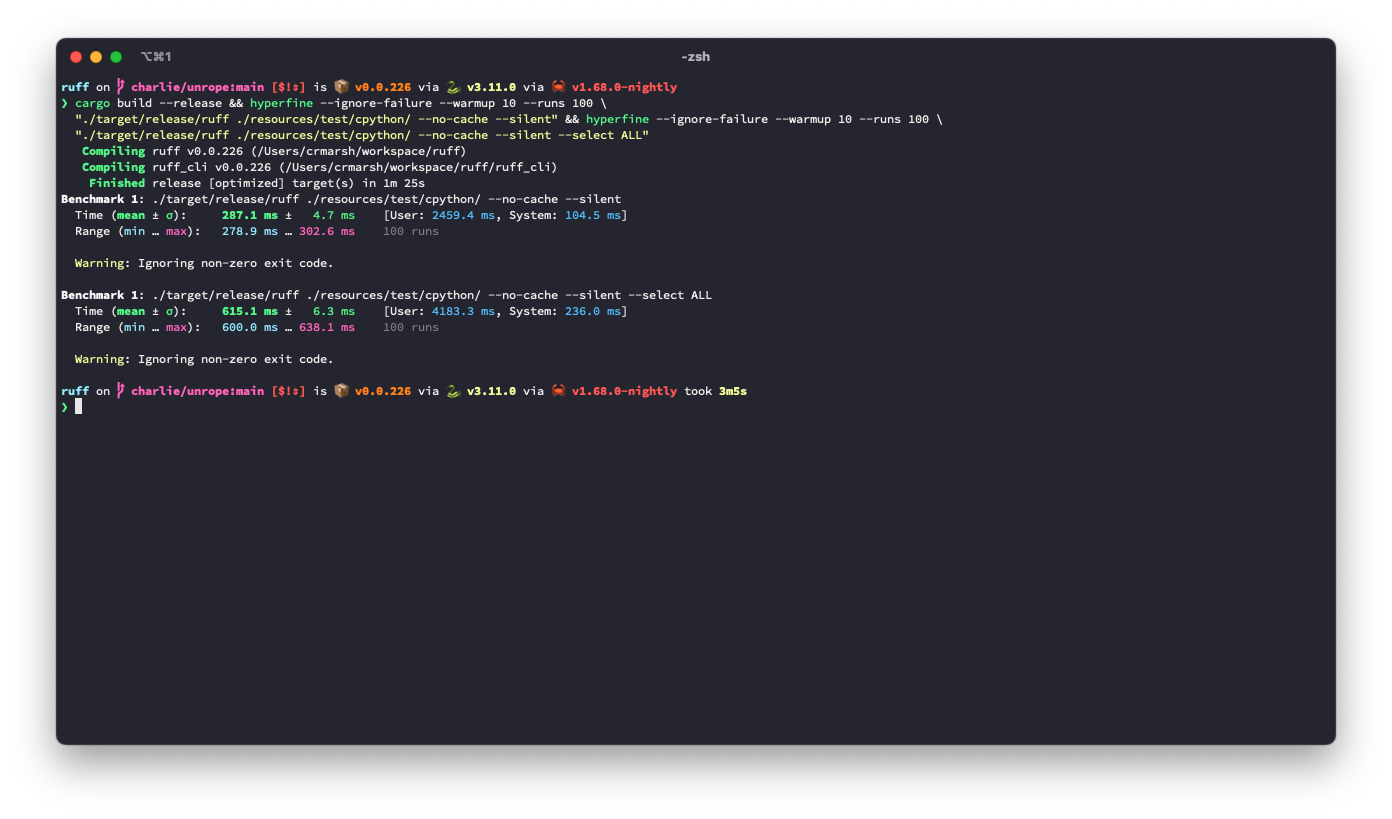

## Summary

The problem: given a (row, column) number (e.g., for a token in the AST), we need to be able to map it to a precise byte index in the source code. A while ago, we moved to `ropey` for this, since it was faster in practice (mostly, I think, because it's able to defer indexing). However, at some threshold of accesses, it becomes faster to index the string in advance, as we're doing here.
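For readers unfamiliar with the technique, "indexing the string in advance" roughly means recording the byte offset of every line start once, so that each later lookup is a cheap table access. A minimal Python sketch, assuming 1-based rows, 0-based character columns and `\n` or `\r\n` line endings (the function names are made up; the actual change is in Rust):

```python
from typing import List


def build_line_starts(data: bytes) -> List[int]:
    """Byte offset at which each line starts, computed in a single pass."""
    starts = [0]
    for i, byte in enumerate(data):
        if byte == 0x0A:  # "\n", which also terminates "\r\n"
            starts.append(i + 1)
    return starts


def byte_offset(data: bytes, starts: List[int], row: int, column: int) -> int:
    """Map a 1-based row and 0-based character column to a byte offset."""
    line_start = starts[row - 1]
    line_end = starts[row] if row < len(starts) else len(data)
    line = data[line_start:line_end].decode("utf-8")
    # Columns count characters, so re-encode the prefix to count bytes.
    return line_start + len(line[:column].encode("utf-8"))


source = "x = 'é'\ny = 1\n".encode("utf-8")
starts = build_line_starts(source)
print(byte_offset(source, starts, 2, 4))
```

The up-front pass costs time proportional to the file size even if only one lookup follows, which is consistent with the trade-off described above.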

## Benchmark

It looks like this is ~3.6% slower for the default rule set, but ~9.3% faster for `--select ALL`.

**I suspect there's a strategy that would be strictly faster in both cases**, based on deferring even more computation (right now, we lazily compute these offsets, but we do it for the entire file at once, even if we only need some slice at the top), or caching the `ropey` lookups in some way.

Before:

After:

## Alternatives

I tried tweaking the `Vec::with_capacity` hints, and even trying `Vec::with_capacity(str_indices::lines_crlf::count_breaks(contents))` to do a quick scan of the number of lines, but that turned out to be slower.

Add tests.

Ensure that these cases are caught by ICN001:

```python

from xml.dom import minidom

from xml.dom.minidom import parseString

```

with config:

```toml

[tool.ruff.flake8-import-conventions.extend-aliases]

"dask.dataframe" = "dd"

"xml.dom.minidom" = "md"

"xml.dom.minidom.parseString" = "pstr"

```

This _did_ fix https://github.com/charliermarsh/ruff/issues/1894, but was a little premature. `toml` doesn't actually depend on `toml-edit` yet, and `v0.5.11` was mostly about deprecations AFAICT. So upgrading might solve that issue, but could introduce other incompatibilities, and I'd like to minimize churn. I expect that `toml` will have a new release soon, so we can revert this revert.

Reverts charliermarsh/ruff#2040.

The idea is the same as #1867. Avoids emitting `SIM102` twice for the following code:

```python

if a:
    if b:
        if c:
            d

```

```

resources/test/fixtures/flake8_simplify/SIM102.py:1:1: SIM102 Use a single `if` statement instead of nested `if` statements

resources/test/fixtures/flake8_simplify/SIM102.py:2:5: SIM102 Use a single `if` statement instead of nested `if` statements

```

This PR adds the scaffolding files for `flake8-type-checking`, along with the simplest rule (`empty-type-checking-block`), just as an example to get us started.

See: #1785.

543865c96b introduced

RuleCode::origin() -> RuleOrigin generation via a macro, while that

signature now has been renamed to Rule::origin() -> Linter we actually

want to get rid of it since rules and linters shouldn't be this tightly

coupled (since one rule can exist in multiple linters).

Another disadvantage of the previous approach was that the prefixes

had to be defined in ruff_macros/src/prefixes.rs, which was easy to

miss when defining new linters in src/*, case in point

INP001 => violations::ImplicitNamespacePackage has in the meantime been

added without ruff_macros/src/prefixes.rs being updated accordingly

which resulted in `ruff --explain INP001` mistakenly reporting that the

rule belongs to isort (since INP001 starts with the isort prefix "I").

The derive proc macro introduced in this commit requires every variant

to have at least one #[prefix = "..."], eliminating such mistakes.

More accurate since the enum also encompasses:

* ALL (which isn't a prefix at all)

* fully-qualified rule codes (which aren't prefixes unless you say

they're a prefix to the empty string but that's not intuitive)

"origin" was accurate since ruff rules are currently always modeled

after one origin (except the Ruff-specific rules).

Since we however want to introduce a many-to-many mapping between codes

and rules, the term "origin" no longer makes much sense. Rules usually

don't have multiple origins but one linter implements a rule first and

then others implement it later (often inspired from another linter).

But we don't actually care much about where a rule originates from when

mapping multiple rule codes to one rule implementation, so renaming

RuleOrigin to Linter is less confusing with the many-to-many system.

Tracking issue: https://github.com/charliermarsh/ruff/issues/2024

Implementation for EXE003, EXE004 and EXE005 of `flake8-executable`

(shebang should contain "python", not have whitespace before, and should be on the first line)

Please keep in mind that this is my first Rust contribution.

The remaining EXE-rules are a combination of shebang (`lines.rs`), file permissions (`fs.rs`) and if-conditions (`ast.rs`). I was not able to find other rules that have interactions/dependencies in them. Any advice on how this can be best implemented would be very welcome.

For autofixing `EXE005`, I had in mind to _move_ the shebang line to the top of the file. This could be achieved by a combination of `Fix::insert` and `Fix::delete` (multiple fixes per diagnostic), or by implementing a dedicated `Fix::move`, or perhaps in other ways. For now I've left it out, but I'm keen to hear what you think would be most consistent with the package, and any pointers on where to start (if at all).

---

If you care about another testimonial:

`ruff` not only helps staying on top of the many excellent flake8 plugins and other Python code quality tools that are available, it also applies them at baffling speed.

(Planning to implement it soon for github.com/pandas-profiling/pandas-profiling (as largest contributor) and github.com/ing-bank/popmon.)

Rule described here: https://www.flake8rules.com/rules/E101.html

I tried to follow the contributing guidelines closely, as I've never worked with Rust before. I stumbled across Ruff a few days ago and would like to use it in our project, but we use a bunch of flake8 rules that are not yet implemented in ruff, so I decided to give it a go.

Following up on #2018/#2019 discussion, this moves the readme's development-related bits to `CONTRIBUTING.md` to avoid duplication, and fixes up the commands accordingly 😄

As per Cargo.toml our minimal supported Rust version is 1.65.0, so we

should be using that version in our CI for cargo test and cargo build.

This was apparently accidentally changed in

79ca66ace5.

Previous output for `ruff --explain E711`:

E711 (pycodestyle): Comparison to `None` should be `cond is None`

New output:

none-comparison

Code: E711 (pycodestyle)

Autofix is always available.

Message formats:

* Comparison to `None` should be `cond is None`

* Comparison to `None` should be `cond is not None`

For now, we're just gonna avoid flagging this for `elif` blocks, following the same reasoning as for ternaries. We can handle all of these cases, but we'll knock out the TODOs as a pair, and this avoids broken code.

Closes #2007.

As we surface rule names more to users we want

them to be easier to type than PascalCase.

Prior art:

Pylint and ESLint also use kebab-case for their rule names.

Clippy uses snake_case but only for syntactical reasons

(so that the argument to e.g. #![allow(clippy::some_lint)]

can be parsed as a path[1]).

[1]: https://doc.rust-lang.org/reference/paths.html

This PR adds a new check that turns expressions such as `[1, 2, 3] + foo` into `[1, 2, 3, *foo]`, since the latter is easier to read and faster:

```

~ $ python3.11 -m timeit -s 'b = [6, 5, 4]' '[1, 2, 3] + b'

5000000 loops, best of 5: 81.4 nsec per loop

~ $ python3.11 -m timeit -s 'b = [6, 5, 4]' '[1, 2, 3, *b]'

5000000 loops, best of 5: 66.2 nsec per loop

```

However there's a couple of gotchas:

* This felt like a `simplify` rule, so I borrowed an unused `SIM` code even if the upstream `flake8-simplify` doesn't do this transform. If it should be assigned some other code, let me know 😄

* **More importantly** this transform could be unsafe if the other operand of the `+` operation has overridden `__add__` to do something else. What's the `ruff` policy around potentially unsafe operations? (I think some of the suggestions other ported rules give could be semantically different from the original code, but I'm not sure.)

* I'm not a very established Rustacean, so there's no doubt my code isn't quite idiomatic. (For instance, is there a neater way to write that four-way `match` statement?)
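To make the second gotcha concrete, here is a small self-contained example (the `Tagged` class is made up for illustration) in which the two spellings give different results because the right-hand operand defines `__radd__`:

```python
class Tagged:
    """Iterable whose reflected addition is not list concatenation."""

    def __init__(self, *items):
        self.items = items

    def __iter__(self):
        return iter(self.items)

    def __radd__(self, other):
        # Deliberately unusual: return the combined length instead of a list.
        return len(other) + len(self.items)


foo = Tagged(4, 5)
concatenated = [1, 2, 3] + foo  # dispatches to Tagged.__radd__
unpacked = [1, 2, 3, *foo]  # only uses Tagged.__iter__

print(concatenated)  # 5
print(unpacked)  # [1, 2, 3, 4, 5]
```

For plain lists the two forms produce equal results, so the difference only shows up for operands with custom addition behavior.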

Thanks for `ruff`, by the way! :)

This commit fixes a bug accidentally introduced in

6cf770a692,

which caused every `ruff --explain <code>` invocation to fail with:

thread 'main' panicked at 'Mismatch between definition and access of `explain`.

Could not downcast to ruff::registry::Rule, need to downcast to &ruff::registry::Rule',

ruff_cli/src/cli.rs:184:18

We also add an integration test for --explain to prevent such bugs from

going by unnoticed in the future.

# This commit has been generated via the following Python script:

# (followed by `cargo +nightly fmt` and `cargo dev generate-all`)

# For the reasoning see the previous commit(s).

import re
import sys

for path in (
    'src/violations.rs',
    'src/rules/flake8_tidy_imports/banned_api.rs',
    'src/rules/flake8_tidy_imports/relative_imports.rs',
):
    with open(path) as f:
        text = ''
        while line := next(f, None):
            if line.strip() != 'fn message(&self) -> String {':
                text += line
                continue

            text += ' #[derive_message_formats]\n' + line

            body = next(f)
            while (line := next(f)) != ' }\n':
                body += line

            # body = re.sub(r'(?<!code\| |\.push\()format!', 'format!', body)
            body = re.sub(
                r'("[^"]+")\s*\.to_string\(\)', r'format!(\1)', body, re.DOTALL
            )
            body = re.sub(
                r'(r#".+?"#)\s*\.to_string\(\)', r'format!(\1)', body, re.DOTALL
            )

            text += body + ' }\n'

            while (line := next(f)).strip() != 'fn placeholder() -> Self {':
                text += line
            while (line := next(f)) != ' }\n':
                pass

    with open(path, 'w') as f:
        f.write(text)

The idea is nice and simple: we replace

fn placeholder() -> Self;

with

fn message_formats() -> &'static [&'static str];

So e.g. if a Violation implementation defines:

fn message(&self) -> String {

format!("Local variable `{name}` is assigned to but never used")

}

it would also have to define:

fn message_formats() -> &'static [&'static str] {

&["Local variable `{name}` is assigned to but never used"]

}

Since we however obviously do not want to duplicate all of our format

strings we simply introduce a new procedural macro attribute

#[derive_message_formats] that can be added to the message method

declaration in order to automatically derive the message_formats

implementation.

This commit implements the macro. The following and final commit

updates violations.rs to use the macro. (The changes have been separated

because the next commit is autogenerated via a Python script.)

ruff_dev::generate_rules_table previously documented which rules are

autofixable via DiagnosticKind::fixable ... since the DiagnosticKind was

obtained via Rule::kind (and Violation::placeholder) which we both want

to get rid of we have to obtain the autofixability via another way.

This commit implements such another way by adding an AUTOFIX

associated constant to the Violation trait. The constant is of the type

Option<AutofixKind>; AutofixKind is a new struct containing an

Availability enum { Sometimes, Always}, letting us additionally document

that some autofixes are only available sometimes (which previously

wasn't documented). We intentionally introduce this information in a

struct so that we can easily introduce further autofix metadata in the

future such as autofix applicability[1].

[1]: https://doc.rust-lang.org/stable/nightly-rustc/rustc_errors/enum.Applicability.html

While ruff displays the string returned by Violation::message in its

output for detected violations the messages displayed in the README

and in the `--explain <code>` output previously used the

DiagnosticKind::summary() function which for some verbose messages

provided shorter descriptions.

This commit removes DiagnosticKind::summary, and moves the more

extensive documentation into doc comments ... these are not displayed

yet to the user but doing that is very much planned.

This commit series removes the following associated

function from the Violation trait:

fn placeholder() -> Self;

ruff previously used this placeholder approach for the messages it

listed in the README and displayed when invoked with --explain <code>.

This approach is suboptimal for three reasons:

1. The placeholder implementations are completely boring code since they

just initialize the struct with some dummy values.

2. Displaying concrete error messages with arbitrary interpolated values

can be confusing for the user since they might not recognize that the

values are interpolated.

3. Some violations have varying format strings depending on the

violation which could not be documented with the previous approach

(while we could have changed the signature to return Vec<Self> this

would still very much suffer from the previous two points).

We therefore drop Violation::placeholder in favor of a new macro-based

approach, explained in commit 4/5.

Violation::placeholder is only invoked via Rule::kind, so we firstly

have to get rid of all Rule::kind invocations ... this commit starts

removing the trivial cases.

Fixes: #1953

@charliermarsh thank you for the tips in the issue.

I'm not very familiar with Rust, so please excuse if my string formatting syntax is messy.

In terms of testing, I compared output of `flake8 --format=pylint ` and `cargo run --format=pylint` on the same code and the output syntax seems to check out.

Since the UI still relies on the rule codes this improves the developer

experience by letting developers view the code of a Rule enum variant by

hovering over it.

# This commit was automatically generated by running the following

# script (followed by `cargo +nightly fmt`):

import glob
import re
from typing import NamedTuple

class Rule(NamedTuple):
    code: str
    name: str
    path: str

def rules() -> list[Rule]:
    """Returns all the rules defined in `src/registry.rs`."""
    file = open('src/registry.rs')
    rules = []

    while next(file) != 'ruff_macros::define_rule_mapping!(\n':
        continue

    while (line := next(file)) != ');\n':
        line = line.strip().rstrip(',')
        if line.startswith('//'):
            continue
        code, path = line.split(' => ')
        name = path.rsplit('::')[-1]
        rules.append(Rule(code, name, path))

    return rules

code2name = {r.code: r.name for r in rules()}

for pattern in ('src/**/*.rs', 'ruff_cli/**/*.rs', 'ruff_dev/**/*.rs', 'scripts/add_*.py'):
    for name in glob.glob(pattern, recursive=True):
        with open(name) as f:
            text = f.read()

        text = re.sub('Rule(?:Code)?::([A-Z]\w+)', lambda m: 'Rule::' + code2name[m.group(1)], text)
        text = re.sub(r'(?<!"<FilePattern>:<)RuleCode\b', 'Rule', text)
        text = re.sub('(use crate::registry::{.*, Rule), Rule(.*)', r'\1\2', text) # fix duplicate import

        with open(name, 'w') as f:
            f.write(text)

This commit series refactors ruff to decouple "rules" from "rule codes",

in order to:

1. Make our code more readable by changing e.g.

RuleCode::UP004 to Rule::UselessObjectInheritance.

2. Let us cleanly map multiple codes to one rule, for example:

[UP004] in pyupgrade, [R0205] in pylint and [PIE792] in flake8-pie

all refer to the rule UselessObjectInheritance but ruff currently

only associates that rule with the UP004 code (since the

implementation was initially modeled after pyupgrade).

3. Let us cleanly map one code to multiple rules, for example:

[C0103] from pylint encompasses N801, N802 and N803 from pep8-naming.

The latter two steps are not yet implemented by this commit series

but this refactoring enables us to introduce such a mapping. Such a

mapping would also let us expand flake8_to_ruff to support e.g. pylint.
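To illustrate what such a many-to-many mapping could look like, here is a hypothetical Python sketch. The codes are the ones listed above; the rule names other than `UselessObjectInheritance` and the table layout are assumptions made for this example, not something introduced by this commit series.

```python
from collections import defaultdict
from typing import Dict, List, Set

# One code may select several rules, and several codes may select one rule.
CODE_TO_RULES: Dict[str, List[str]] = {
    "UP004": ["UselessObjectInheritance"],
    "R0205": ["UselessObjectInheritance"],
    "PIE792": ["UselessObjectInheritance"],
    # Assumed rule names for N801, N802 and N803.
    "C0103": ["InvalidClassName", "InvalidFunctionName", "InvalidArgumentName"],
}


def invert(code_to_rules: Dict[str, List[str]]) -> Dict[str, Set[str]]:
    """Build the reverse lookup: rule name -> every code that selects it."""
    rule_to_codes: Dict[str, Set[str]] = defaultdict(set)
    for code, rules in code_to_rules.items():
        for rule in rules:
            rule_to_codes[rule].add(code)
    return dict(rule_to_codes)


RULE_TO_CODES = invert(CODE_TO_RULES)
print(sorted(RULE_TO_CODES["UselessObjectInheritance"]))
```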

After the next commit which just does some renaming the following four

commits remove all trait derivations from the Rule (previously RuleCode)

enum that depend on the variant names to guarantee that they are not

used anywhere anymore so that we can rename all of these variants in the

eighth and final commit without breaking anything.

While the plan very much is to also surface these human-friendly names

more in the user interface this is not yet done in this commit series,

which does not change anything about the UI: it's purely a refactor.

[UP004]: pyupgrade doesn't actually assign codes to its messages.

[R0205]: https://pylint.pycqa.org/en/latest/user_guide/messages/refactor/useless-object-inheritance.html

[PIE792]: https://github.com/sbdchd/flake8-pie#pie792-no-inherit-object

[C0103]: https://pylint.pycqa.org/en/latest/user_guide/messages/convention/invalid-name.html

This is slightly buggy due to Instagram/LibCST#855; it will complain `[ERROR] Failed to fix nested with: Failed to extract CST from source` when trying to fix nested parenthesized `with` statements lacking trailing commas. But presumably people who write parenthesized `with` statements already knew that they don’t need to nest them.

Signed-off-by: Anders Kaseorg <andersk@mit.edu>

If a `try` block has multiple statements, a compound statement, or

control flow, rewriting it with `contextlib.suppress` would obfuscate

the fact that the exception still short-circuits further statements in

the block.
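A short example of the behavior in question: with `contextlib.suppress`, the exception is swallowed, but the remaining statements in the block are still skipped, which is easy to miss when reading the rewritten code.

```python
import contextlib

log = []

with contextlib.suppress(KeyError):
    log.append("before")
    {}["missing"]  # raises KeyError, which is suppressed...
    log.append("after")  # ...but this statement never runs

print(log)  # ['before']
```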

Fixes #1947.

Signed-off-by: Anders Kaseorg <andersk@mit.edu>

Since our binding tracking is somewhat limited, I opted to favor false negatives over false positives. So, e.g., this won't trigger SIM115:

```py

with contextlib.ExitStack():
    f = exit_stack.enter_context(open("filename"))

```

(Notice that `exit_stack` is unbound.)

The alternative strategy required us to incorrectly trigger SIM115 on this:

```py

with contextlib.ExitStack() as exit_stack:
    exit_stack_ = exit_stack
    f = exit_stack_.enter_context(open("filename"))

```

Closes #1945.

The Settings struct previously contained the fields:

pub enabled: HashableHashSet<RuleCode>,

pub fixable: HashableHashSet<RuleCode>,

This commit merges both fields into one by introducing a new

RuleTable type, wrapping HashableHashMap<RuleCode, bool>,

which has the following benefits:

1. It makes the invalid state that a rule is

disabled but fixable unrepresentable.

2. It encapsulates the implementation details of the table.

(It currently uses an FxHashMap but that may change.)

3. It results in more readable code.

settings.rules.enabled(rule)

settings.rules.should_fix(rule)

is more readable than:

settings.enabled.contains(rule)

settings.fixable.contains(rule)
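The first benefit can be illustrated with a small Python analogue of the idea. This is a sketch and not the Rust type: the `enable` and `disable` methods are assumptions, and only `enabled` and `should_fix` mirror the calls shown above.

```python
from typing import Dict


class RuleTable:
    """Maps each enabled rule to whether it should be fixed."""

    def __init__(self) -> None:
        self._rules: Dict[str, bool] = {}

    def enable(self, rule: str, should_fix: bool) -> None:
        self._rules[rule] = should_fix

    def disable(self, rule: str) -> None:
        # Removing the key drops the fix flag with it, so a rule can never
        # be "disabled but fixable".
        self._rules.pop(rule, None)

    def enabled(self, rule: str) -> bool:
        return rule in self._rules

    def should_fix(self, rule: str) -> bool:
        return self._rules.get(rule, False)
```

With two separate sets, nothing would stop a rule from appearing in `fixable` without appearing in `enabled`; with a single map, that state simply cannot be stored.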

I welcome any suggestions. By the way, I have a question: I checked, and all flake8-pie rules can be fixed by ruff, but does this one also need to be fixed automatically?

rel #1543

The idea is to follow the Rust naming convention for lints[1]:

> the lint name should make sense when read as

> "allow lint-name" or "allow lint-name items"

Following that convention prefixing "Banned" is

redundant as it could be prefixed to any lint name.

[1]: https://rust-lang.github.io/rfcs/0344-conventions-galore.html#lints

The caching mechanism of the CLI (ruff_cli::cache) relies on

ruff::settings::Settings implementing the Hash trait.

The ruff::settings::Settings struct previously couldn't automatically

derive the Hash implementation via the #[derive(Hash)] macro attribute

since some of its field types intentionally[1][2] don't implement Hash

(namely regex::Regex, globset::GlobMatcher and globset::GlobSet and

HashMap and HashSet from the standard library).

The code therefore previously implemented the Hash trait by hand for the

whole struct. Implementing Hash by hand for structs that are subject to

change is a bad idea since it's very easy to forget to update the Hash

implementation when adding a new field to the struct. And the Hash

implementation indeed was already incorrect by omitting several fields

from the hash.

This commit introduces wrapper types for Regex, GlobMatcher, GlobSet,

HashSet & HashMap that implement Hash so that we can still add

#[derive(Hash)] to the Settings struct, guaranteeing a correct hash

implementation.

[1]: https://github.com/rust-lang/regex/issues/364#issuecomment-301082076

[2]: The standard library doesn't impl<T: Hash + Ord> Hash for HashSet<T>

presumably since sorted() requires an allocation and Hash

implementations are generally expected to work without allocations.
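The wrapper idea from footnote [2] can be sketched in Python as follows. This is only an analogy: Python already ships `frozenset`, and the class name here is made up. The point is that sorting before hashing makes the hash independent of insertion order, at the cost of an allocation.

```python
from typing import Iterable


class HashableSet:
    """Set wrapper whose hash does not depend on insertion order."""

    def __init__(self, items: Iterable[str]) -> None:
        self._items = set(items)

    def __eq__(self, other: object) -> bool:
        return isinstance(other, HashableSet) and self._items == other._items

    def __hash__(self) -> int:
        # sorted() allocates a new list, which is the cost mentioned above.
        return hash(tuple(sorted(self._items)))
```

A settings object built from such wrappers can then derive its own hash from its fields rather than hand-writing it.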

We want to automatically derive Hash for the library settings, which

requires us to split off all the settings unused by the library

(since these shouldn't affect the hash used by ruff_cli::cache).

Implements [flake8-commas](https://github.com/PyCQA/flake8-commas). Fixes #1058.

The plugin is mostly redundant with Black (and also deprecated upstream), but very useful for projects which can't/won't use an auto-formatter.

This linter works on tokens. Before porting to Rust, I cleaned up the Python code ([link](https://gist.github.com/bluetech/7c5dcbdec4a73dd5a74d4bc09c72b8b9)) and made sure the tests pass. In the Rust version I tried to add explanatory comments, to the best of my understanding of the original logic.

Some changes I did make:

- Got rid of rule C814 - "missing trailing comma in Python 2". Ruff doesn't support Python 2.

- Merged rules C815 - "missing trailing comma in Python 3.5+" and C816 - "missing trailing comma in Python 3.6+" into C812 - "missing trailing comma". These Python versions are outdated, didn't think it was worth the complication.

- Added autofixes for C812 and C819.

Autofix is missing for C818 - "trailing comma on bare tuple prohibited". It needs to turn e.g. `x = 1,` into `x = (1, )`, it's a bit difficult to do with tokens only, so I skipped it for now.

I ran the rules on cpython/Lib and on a big internal code base and it works as intended (though I only sampled the diffs).

The primary motivation is that we can now robustly detect `\` continuations due to the addition of `Tok::NonLogicalNewline`. This PR generalizes the approach we took to comments (track all lines that contain any comments), and applies it to continuations too.

define_rule_mapping! was previously implemented as a declarative macro,

which was however partially relying on an origin_by_code! proc macro

because declarative macros cannot match on substrings of identifiers.

Currently all define_rule_mapping! lines look like the following:

TID251 => violations::BannedApi,

TID252 => violations::BannedRelativeImport,

We want to break up violations.rs, moving the violation definitions to

the respective rule modules. To do this we want to change the previous

lines to:

TID251 => rules::flake8_tidy_imports::banned_api::BannedApi,

TID252 => rules::flake8_tidy_imports::relative_imports::RelativeImport,

This however doesn't work because the define_rule_mapping! macro is

currently defined as:

($($code:ident => $mod:ident::$name:ident,)+) => { ... }

That is it only supported $module::$name but not longer paths with

multiple modules. While we could define `=> $path:path`[1] then we

could no longer access the last path segment, which we need because

we use it for the DiagnosticKind variant names. And

`$path:path::$last:ident` doesn't work either because it would be

ambiguous (Rust wouldn't know where the path ends ... so path fragments

have to be followed by some punctuation/keyword that may not be part of

paths). And we also cannot just introduce a procedural macro like

path_basename!(...) because the following is not valid Rust code:

enum Foo { foo!(...), }

(macros cannot be called in the place where you define variants.)

So we have to convert define_rule_mapping! into a proc macro in order to

support paths of arbitrary length and this commit implements that.

[1]: https://doc.rust-lang.org/reference/macros-by-example.html#metavariables

Before

```

resources/test/fixtures/flake8_simplify/SIM208.py:1:13: SIM208 Use `a` instead of `not (not a)`

|

1 | if not (not a): # SIM208

| ^ SIM208

|

= help: Replace with `a`

resources/test/fixtures/flake8_simplify/SIM208.py:4:14: SIM208 Use `a == b` instead of `not (not a == b)`

|

4 | if not (not (a == b)): # SIM208

| ^^^^^^ SIM208

|

= help: Replace with `a == b`

```

After

```

resources/test/fixtures/flake8_simplify/SIM208.py:1:4: SIM208 Use `a` instead of `not (not a)`

|

1 | if not (not a): # SIM208

| ^^^^^^^^^^^ SIM208

|

= help: Replace with `a`

resources/test/fixtures/flake8_simplify/SIM208.py:4:4: SIM208 Use `a == b` instead of `not (not a == b)`

|

4 | if not (not (a == b)): # SIM208

| ^^^^^^^^^^^^^^^^^^ SIM208

|

= help: Replace with `a == b`

```

This makes it easier to see which rules you're enabling when selecting

one of the pylint codes (like `PLC`). This also makes it clearer what

those abbreviations stand for. When I first saw the pylint section, I

was very confused by that, so others might be as well.

See it rendered here:

https://github.com/thomkeh/ruff/blob/patch-1/README.md#pylint-plc-ple-plr-plw

This bumps RustPython so we can use the new `NonLogicalNewline` token.

A couple of rules needed a fix due to the new token. There might be more

that are not caught by tests (anything working with tokens directly with

lookaheads), though I hope not.

This PR makes the following changes to improve `SIM117`:

- Avoid emitting `SIM117` multiple times within the same `with`

statement.

- Adjust the error range.

## Example

```python

with A() as a:  # SIM117
    with B() as b:
        with C() as c:
            print("hello")

```

### Current

```

resources/test/fixtures/flake8_simplify/SIM117.py:5:1: SIM117 Use a single `with` statement with multiple contexts instead of nested `with` statements

|

5 | / with A() as a: # SIM117

6 | | with B() as b:

7 | | with C() as c:

8 | | print("hello")

| |__________________________^ SIM117

|

resources/test/fixtures/flake8_simplify/SIM117.py:6:5: SIM117 Use a single `with` statement with multiple contexts instead of nested `with` statements

|

6 | with B() as b:

| _____^

7 | | with C() as c:

8 | | print("hello")

| |__________________________^ SIM117

|

```

### Improved

```

resources/test/fixtures/flake8_simplify/SIM117.py:5:1: SIM117 Use a single `with` statement with multiple contexts instead of nested `with` statements

|

5 | / with A() as a: # SIM117

6 | | with B() as b:

7 | | with C() as c:

| |______________________^ SIM117

|

```
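
For reference, the rewrite that the `SIM117` message points toward is a single `with` statement with multiple contexts. The following is a minimal runnable sketch; `A`, `B`, and `C` are hypothetical context managers built with `contextlib` purely for illustration, and the `events` list exists only to make the enter/exit order visible:

```python
from contextlib import contextmanager

events = []


def make_context(name):
    """Build a hypothetical context manager that records enter/exit order."""

    @contextmanager
    def manager():
        events.append(f"enter {name}")
        try:
            yield name
        finally:
            events.append(f"exit {name}")

    return manager


A, B, C = make_context("A"), make_context("B"), make_context("C")

# Single `with` statement with multiple contexts; contexts are entered
# left to right and exited in reverse, just like the nested form.
with A() as a, B() as b, C() as c:
    events.append("hello")
```

The nested and the combined forms enter and exit the contexts in the same order, which is why the rewrite is behavior-preserving.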

Signed-off-by: harupy <hkawamura0130@gmail.com>

I initially attempted to run `wasm-pack build -p ruff` which gave the

error message:

Error: crate directory is missing a `Cargo.toml` file; is `-p` the wrong

directory?

I interpreted that as wasm-pack looking for the "ruff" directory because

I specified -p ruff; however, the actual wasm-pack build usage is:

wasm-pack build [FLAGS] [OPTIONS] <path> <cargo-build-options>

And I was missing the `<path>` argument. So this actually wasn't at all

a bug in wasm-pack but just a confusing error message. And the symlink

hack I introduced in the previous commit didn't actually work ... I only

accidentally omitted the `-p` when testing (which ended up as `ruff`

being the <path> argument) ... CLIs are fun.

This lets you test the ruff linters or use the ruff library

without having to compile the ~100 additional dependencies

that are needed by the CLI.

Because we set the following in the [workspace] section of Cargo.toml:

default-members = [".", "ruff_cli"]

`cargo run` still runs the CLI and `cargo test` still tests

the code in src/ as well as the code in the new ruff_cli crate.

(But you can now also run `cargo test -p ruff` to only test the linters.)

This PR refactors our import-tracking logic to leverage our existing

logic for tracking bindings. It's a significant simplification and a

significant improvement (as we can now track reassignments), and it closes

out a bunch of subtle bugs.

Though the AST tracks all bindings (e.g., when parsing `import os as

foo`, we bind the name `foo` to a `BindingKind::Importation` that points

to the `os` module), when I went to implement import tracking (e.g., to

ensure that if the user references `List`, it's actually `typing.List`),

I added a parallel system specifically for this use-case.

That was a mistake, for a few reasons:

1. It didn't track reassignments, so if you had `from typing import

List`, but `List` was later overridden, we'd still consider any

reference to `List` to be `typing.List`.

2. It required a bunch of extra logic, including complex logic to try and

optimize the lookups, since it's such a hot codepath.

3. There were a few bugs in the implementation that were just hard to

correct under the existing abstractions (e.g., if you did `from typing

import Optional as Foo`, then we'd treat any reference to `Foo` _or_

`Optional` as `typing.Optional`, even though, in that case, `Optional`

was really unbound).

The new implementation goes through our existing binding tracking: when

we get a reference, we find the appropriate binding given the current

scope stack, and normalize it back to its original target.
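
As a rough illustration of that lookup, here is a toy model in Python. It is a sketch under stated assumptions, not the actual implementation: the `Binding` class, its `kind` strings, and the `resolve` helper are all hypothetical names, and scopes are modeled as plain dictionaries.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Binding:
    kind: str                     # hypothetical kinds: "import" or "assignment"
    target: Optional[str] = None  # fully qualified target, for imports only


def resolve(name, scopes):
    """Find the innermost binding for `name` and normalize it to its target."""
    for scope in reversed(scopes):
        binding = scope.get(name)
        if binding is not None:
            # Only import bindings map back to an original target.
            return binding.target if binding.kind == "import" else None
    return None  # the name is unbound


module_scope = {}
scopes = [module_scope]

# from typing import Optional as Foo
module_scope["Foo"] = Binding("import", "typing.Optional")
# from typing import List
module_scope["List"] = Binding("import", "typing.List")

foo_target = resolve("Foo", scopes)            # the alias resolves to its target
optional_target = resolve("Optional", scopes)  # `Optional` itself was never bound
list_before = resolve("List", scopes)

# List = list  (a later reassignment replaces the import binding)
module_scope["List"] = Binding("assignment")
list_after = resolve("List", scopes)
```

Because the lookup goes through the same binding table as everything else, aliases and reassignments are handled without a parallel tracking system.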

Closes #1690.

Closes #1790.

Non-basename glob matches (e.g., for `--per-file-ignores`) assume that

the path has been converted to an absolute path. (We do this for

filenames as part of the directory traversal.) For filenames passed via

stdin, though, we're missing this conversion. So `--per-file-ignores`

that rely on the _basename_ worked as expected, but directory paths did

not.
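
The difference can be sketched in Python with `fnmatch` standing in for the real glob matcher. This is only an illustration: `PROJECT_ROOT`, `matches`, and `to_absolute` are hypothetical names, and the matching rules are simplified.

```python
import posixpath
from fnmatch import fnmatchcase

PROJECT_ROOT = "/project"  # hypothetical working directory


def matches(path, pattern):
    """Match on the basename first, then on the full path as given."""
    return fnmatchcase(posixpath.basename(path), pattern) or fnmatchcase(path, pattern)


def to_absolute(path):
    """Stand-in for the normalization applied during directory traversal."""
    return posixpath.normpath(posixpath.join(PROJECT_ROOT, path))


stdin_filename = "src/foo.py"
basename_pattern = "foo.py"
directory_pattern = "/project/src/*.py"

# Basename patterns work even without normalization.
basename_hit = matches(stdin_filename, basename_pattern)
# Directory patterns miss while the stdin filename is still relative...
relative_hit = matches(stdin_filename, directory_pattern)
# ...and match once the filename has been made absolute.
absolute_hit = matches(to_absolute(stdin_filename), directory_pattern)
```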

Closes #1840.

This PR adds support for `SIM110` and `SIM111` simplifications of the

form:

```py

def f():
    # SIM110
    for x in iterable:
        if check(x):
            return True
    else:
        return False

```
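
For comparison, the loop form and the `any(...)` form that `SIM110` suggests can be checked against each other. In this sketch, `check` is a hypothetical predicate and the function names are made up for illustration:

```python
def check(x):
    """Hypothetical predicate used only for this illustration."""
    return x > 2


def f_loop(iterable):
    # The for/else form from the example above.
    for x in iterable:
        if check(x):
            return True
    else:
        return False


def f_any(iterable):
    # The simplified form.
    return any(check(x) for x in iterable)


samples = [[], [1, 2], [1, 2, 3], [5]]
results = [(f_loop(s), f_any(s)) for s in samples]
```

Both forms short-circuit on the first element that satisfies the predicate and return `False` for an empty iterable.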

This PR implements `reverse-relative`, from isort, but renames it to

`relative-imports-order` with the respective values `closest-to-furthest`

and `furthest-to-closest`, the latter being the default.
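
A minimal sketch of what the two values mean, assuming that "furthest" refers to the relative import with the most leading dots. The helper names are hypothetical and the real sorting considers more than the dot count:

```python
def relative_level(module):
    """Count the leading dots of a relative module path such as `..parent`."""
    return len(module) - len(module.lstrip("."))


def sort_relative(modules, order="furthest-to-closest"):
    """Order relative imports by how many levels up they reach."""
    furthest_first = order == "furthest-to-closest"
    return sorted(
        modules,
        key=lambda m: (
            -relative_level(m) if furthest_first else relative_level(m),
            m.lstrip("."),
        ),
    )


modules = [".sibling", "...grandparent", "..parent"]
default_order = sort_relative(modules)
reversed_order = sort_relative(modules, order="closest-to-furthest")
```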

Closes #1813.

This PR implements `W505` (`DocLineTooLong`), which is similar to `E501`

(`LineTooLong`) but confined to doc lines.

I based the "doc line" definition on pycodestyle, which defines a doc

line as a standalone comment or string statement. Our definition is a

bit more liberal, since we consider any string statement a doc line

(even if it's part of a multi-line statement) -- but that seems fine to

me.

Note that, unusually, this rule requires custom extraction from both the

token stream (to find standalone comments) and the AST (to find string

statements).
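
The two-source extraction can be approximated in Python with the standard `tokenize` and `ast` modules. This is a sketch of the idea rather than the rule's actual code; `doc_lines`, `too_long_doc_lines`, and the `max_doc_length` parameter are names assumed for illustration.

```python
import ast
import io
import tokenize


def doc_lines(source):
    """Return the 1-based line numbers that count as doc lines in this sketch."""
    lines = set()
    # Standalone comments come from the token stream.
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.COMMENT and tok.line.lstrip().startswith("#"):
            lines.add(tok.start[0])
    # String statements come from the AST.
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Expr)
            and isinstance(node.value, ast.Constant)
            and isinstance(node.value.value, str)
        ):
            lines.update(range(node.lineno, node.end_lineno + 1))
    return lines


def too_long_doc_lines(source, max_doc_length):
    """Return the doc lines whose length exceeds `max_doc_length`."""
    flagged = doc_lines(source)
    return [
        number
        for number, text in enumerate(source.splitlines(), start=1)
        if number in flagged and len(text) > max_doc_length
    ]


SOURCE = (
    "# a standalone comment that is long\n"
    "x = 1  # trailing comment that is also long\n"
    "def f():\n"
    '    """A docstring that is rather long."""\n'
    "    return x\n"
)
long_lines = too_long_doc_lines(SOURCE, max_doc_length=20)
```

Line 2 is long as well, but its comment trails code, so it is not a doc line under this definition and is left to `E501`.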

Closes #1784.

Fixes #1775. Before implementing your solution, I thought of a slightly

simpler one. However, it will let this function pass:

```

def double_inside_single(a):
    'Double inside "single "'

```

If we want this function to pass, my implementation works. But if we do not,

then I can go with how you suggested I implement this (I left how I

would begin to handle it commented out). The bottom of the flake8-quotes

documentation seems to suggest that this should pass:

https://pypi.org/project/flake8-quotes/

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>

Ref #998

- Implements SIM401 with fix

- Added tests

Notes:

- only recognize simple ExprKind::Name variables in expr patterns for

now

- bug fix relative to the reference implementation: check that all three

conditions (dict key, target variable, dict name) match; `flake8_simplify`

only tests the first two (only the first in the second pattern)
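
As an illustration of the pattern `SIM401` targets, the `if`/`else` lookup below can be replaced by `dict.get`. The function names and the `"default"` value are made up for this sketch:

```python
def lookup_if_else(a_dict, key):
    # The same key, the same dict, and the same target variable appear in
    # both branches, which is what makes the rewrite safe.
    if key in a_dict:
        value = a_dict[key]
    else:
        value = "default"
    return value


def lookup_get(a_dict, key):
    # The simplified form.
    value = a_dict.get(key, "default")
    return value


data = {"a": 1, "b": None}
pairs = [(lookup_if_else(data, k), lookup_get(data, k)) for k in ("a", "b", "missing")]
```

If the key tested, the key indexed, or the dict differed between the condition and the assignment, the two forms would no longer be equivalent, hence the three-way check.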

We now skip SIM108 violations if: the resulting statement would exceed

the user-specified line length, or the `if` statement contains comments.
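
A small sketch of the rewrite and of the two skip conditions. `LINE_LENGTH` and `should_suggest` are hypothetical names, and the length check here is simplified to a plain character count:

```python
LINE_LENGTH = 88  # assumed user setting


def label_if_else(n):
    if n >= 0:
        label = "non-negative"
    else:
        label = "negative"
    return label


def label_ternary(n):
    # The form SIM108 suggests.
    label = "non-negative" if n >= 0 else "negative"
    return label


def should_suggest(ternary_line, has_comments, line_length=LINE_LENGTH):
    """Skip the suggestion if the rewrite is too long or would drop comments."""
    return len(ternary_line) <= line_length and not has_comments


short = '    label = "non-negative" if n >= 0 else "negative"'
long_line = "    label = " + "x" * 100 + " if n >= 0 else y"
decisions = (
    should_suggest(short, has_comments=False),
    should_suggest(short, has_comments=True),
    should_suggest(long_line, has_comments=False),
)
```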

Closes #1719.

Closes #1766.

When checking changes in the 0.0.218 release I noticed that auto fixing

PT004 and PT005 was disabled, but this change was not reflected in the

README, so I created this small PR to update it.

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>

We now: (1) trigger PIE794 for objects without bases (not sure why this

was omitted before); and (2) remove the entire line, rather than leaving

behind trailing whitespace.

Resolves #1787.

A part of #827. Posting this for visibility. There is still some work

to be done.

Things that still need to be done before this is ready:

- [x] Does not work when the item is being assigned to a variable

- [x] Does not work if being used in a function call

- [x] Fix incorrectly removed calls in the function

- [x] Has not been tested with pyupgrade negative test cases

Tests from pyupgrade can be seen here:

https://github.com/asottile/pyupgrade/blob/main/tests/features/format_literals_test.py

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>

In isort, this is called `add-imports`, but I prefer the declarative

name.

The idea is that by adding the following to your `pyproject.toml`, you

can ensure that the import is included in all files:

```toml

[tool.ruff.isort]

required-imports = ["from __future__ import annotations"]

```

I mostly reverse-engineered isort's logic for making decisions, though I

made some slight tweaks that I think are preferable. A few comments:

- Like isort, we don't enforce this on empty files (like empty

`__init__.py`).

- Like isort, we require that the import is at the top-level.

- isort will skip any docstrings, and any comments on the first three

lines (I think, based on testing). Ruff places the import after the last

docstring or comment in the file preamble (that is: after the last

docstring or comment that comes before the _first_ non-docstring and

non-comment).
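
The placement rule described above can be approximated in Python with the standard `ast` module. This is a simplified sketch, not the actual implementation: `add_required_import` and `is_string_statement` are hypothetical helpers, and edge cases such as decorators or semicolon-joined statements are ignored.

```python
import ast


def is_string_statement(stmt):
    """True for a bare string expression such as a docstring."""
    return (
        isinstance(stmt, ast.Expr)
        and isinstance(stmt.value, ast.Constant)
        and isinstance(stmt.value.value, str)
    )


def add_required_import(source, required):
    """Insert `required` after the preamble unless it is already at top level."""
    if not source.strip():
        return source  # empty files are left alone
    tree = ast.parse(source)
    wanted = ast.dump(ast.parse(required).body[0])
    if any(ast.dump(stmt) == wanted for stmt in tree.body):
        return source  # already imported at the top level
    lines = source.splitlines()
    # Line number of the first statement that is not a docstring-like string.
    first_code = next(
        (stmt.lineno for stmt in tree.body if not is_string_statement(stmt)),
        len(lines) + 1,
    )
    # Everything before it is preamble; drop trailing blank lines from it.
    keep = first_code - 1
    while keep > 0 and not lines[keep - 1].strip():
        keep -= 1
    return "\n".join(lines[:keep] + [required] + lines[keep:]) + "\n"


REQUIRED = "from __future__ import annotations"
before = '"""Doc."""\n# comment\n\nimport os\n'
after = add_required_import(before, REQUIRED)
```

Running the function a second time on its own output leaves the file unchanged, since the import is then found at the top level.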

Resolves #1700.

We don't have any doctests, but `cargo test --all` spends more than half

the time on doctests? A little confusing, but this brings the test time

from > 4s to < 2s on my machine.

This commit is a first attempt at addressing issue #1003.

The default `isort` behavior is `force-sort-within-sections = false`,

which places `from X import Y` statements after `import X` statements.

When `force-sort-within-sections = true` all imports are sorted by

module name.

When module names are equivalent, the `import` statement comes before

the `from` statement.
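
The two orderings can be sketched as sort keys in Python. Imports are modeled here as hypothetical `(kind, module)` pairs, and the real sorter handles many more details (sections, aliases, case sensitivity):

```python
def sort_imports(statements, force_sort_within_sections=False):
    """Sort (kind, module) pairs, where kind is "import" or "from"."""

    def kind_rank(kind):
        return 0 if kind == "import" else 1

    if force_sort_within_sections:
        # Module name first; `import` wins ties against `from`.
        return sorted(statements, key=lambda s: (s[1], kind_rank(s[0])))
    # Default: all `import X` statements before all `from X import Y`.
    return sorted(statements, key=lambda s: (kind_rank(s[0]), s[1]))


statements = [
    ("from", "os"),
    ("import", "sys"),
    ("import", "os"),
    ("from", "collections"),
]
default_sorted = sort_imports(statements)
forced_sorted = sort_imports(statements, force_sort_within_sections=True)
```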

I ran the following code in Python 3.10 to automatically generate a list

of enums.

```python

import unittest

print(

",\n".join(

sorted(

m.removeprefix("assert") if m != "assert_" else "Underscore"

for m in dir(unittest.TestCase)

if m.startswith("assert")

)

)

)

```

The changes in this commit were generated by running:

for f in $(find src -name '*.rs'); do sed -Ei 's/use crate::registry::.*;/\0use crate::violations;/g' $f; done

for f in $(find src -name '*.rs'); do sed -Ei 's/CheckKind::([A-Z])/violations::\1/g' $f; done

git checkout src/registry.rs src/lib.rs src/lib_wasm.rs src/violations.rs

cargo +nightly fmt

For every available rule, registry.rs currently defines:

1. A CheckCode variant to identify the rule.

2. A CheckKind variant to represent violations of the rule.